ASSISTANT PROFESSOR OF PSYCHOLOGY

PSYCHOLOGY as a science is roughly one hundred years old. It was born testing Weber's Law, a quantitative relationship between sensory discrimination and stimulus intensity, and today all but a few marginal areas employ some branch of mathematics. In the last fifteen years there has been an acceleration in the volume and quality of psychological research. In several areas, most notably in the study of learning, this has led to the discovery of powerful variables and to greater experimental control of behavior. Under these improved breeding conditions a variety of applications of mathematics have been produced. Some are isolated equations or new statistical tests, others are fairly general theories. Some are impressive, with a string of successful predictions to their credit; others are tentative axiomatizations of loose verbal postulates, and do little more than check on consistency. This article presents examples of three rather different types of application: (1) measuring a new dimension, (2) fitting a functional relation, and (3) a simple stochastic model for learning.

Measuring a New Dimension

N. Wiener and C. Shannon developed Information Theory for the study of communication systems. Its main contribution to psychology has been the concept of uncertainty as a dimension of the environment and of behavior.

Any organism produces its behavior in a stimulating environment. Information theory focuses on (1) a set of possible stimuli that may impinge on the organism, (2) the possible behaviors the organism may produce, and (3) the transmission of information between the two. The set of possible stimuli is characterized by their number and by their probabilities. The more stimuli there are, the more "uncertainty" there is as to which will actually occur. For a given number $N$ of equally probable stimuli, the function $\log_2 N$ measures uncertainty in units which give the mean number of "yes-no" questions one would have to ask to find out which stimulus was coming at any time. This unit is the famous "bit of information." For example, eight stimuli would have three bits of uncertainty and require three questions. Perfect transmission of a stimulus with probability $P = 1/N$ could then be said to convey $\log_2 (1/P)$ bits of information, or to be worth that many yes-no questions. For a set of stimuli with unequal probabilities the average uncertainty, or the expected amount of information conveyed per stimulus, equals $\sum P \log_2 (1/P)$.
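The uncertainty measure just described can be sketched in a few lines of Python. The function name and the example probabilities are illustrative assumptions, not part of the original theory's notation.

```python
import math

def uncertainty_bits(probabilities):
    """Average uncertainty in bits: the sum of P * log2(1/P) over all stimuli."""
    # Stimuli with zero probability contribute nothing and are skipped.
    return sum(p * math.log2(1.0 / p) for p in probabilities if p > 0)

# Eight equally probable stimuli: log2(8) = 3 bits, i.e. three yes-no questions.
print(uncertainty_bits([1 / 8] * 8))                 # 3.0

# Hypothetical unequal probabilities: less uncertainty than log2(4) = 2 bits.
print(uncertainty_bits([0.5, 0.25, 0.125, 0.125]))   # 1.75
```

For equally probable stimuli the sum reduces to the simpler logarithm of the number of stimuli, which is why the first example gives exactly three bits.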

The uncertainty of a fixed number of stimuli will be at a maximum when all stimuli are equiprobable and occur independently from time to time. Any variation in probability or any sequential dependency in stimulus occurrence will reduce uncertainty relative to its maximum possible value. One minus the ratio of actual uncertainty to maximum possible uncertainty is a measure of the redundancy of the set of stimuli. When this measure is applied to the set of possible behaviors it is called an index of behavioral stereotypy. Highly redundant stimulation or highly stereotyped behavior conveys little information and is relatively predictable. In this sense as in others a political speech is more redundant and less informative than fiction.
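As a rough illustration, redundancy can be computed for a set of independent stimuli with unequal probabilities. This sketch deliberately ignores sequential dependencies, which would lower uncertainty further. The function names and the probabilities are hypothetical.

```python
import math

def uncertainty_bits(probabilities):
    """Average uncertainty in bits: the sum of P * log2(1/P)."""
    return sum(p * math.log2(1.0 / p) for p in probabilities if p > 0)

def redundancy(probabilities):
    """One minus the ratio of actual to maximum possible uncertainty.

    Assumes at least two stimuli, so the maximum log2(N) is not zero.
    """
    max_bits = math.log2(len(probabilities))
    return 1.0 - uncertainty_bits(probabilities) / max_bits

# Equiprobable stimuli reach the maximum, so redundancy is zero.
print(redundancy([0.25, 0.25, 0.25, 0.25]))   # 0.0

# One dominant stimulus makes the set more predictable, hence more redundant.
print(redundancy([0.7, 0.1, 0.1, 0.1]))
```

Applied to the relative frequencies of an organism's responses rather than to stimuli, the same quantity would serve as the index of stereotypy.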

The uncertainty measure can be applied to many different kinds of stimuli and thus has the potential for entering into highly general laws. So far only scattered studies have employed it, but some interesting empirical results have been found, samples of which follow: (1) Written English is at least 50% redundant. The amount of information conveyed by the average letter of the English alphabet seems to be roughly 2 bits, as compared with a maximum possible of 4.7 bits for 26 stimuli. While high redundancy is inefficient under ideal transmitting conditions it has been found to be desirable when "noise" or random interference with communication is present. In fact, redundancy higher than 90% is deliberately employed in communication where error is costly and noise is great (e.g., an airport control tower). (2) The time taken to recognize a word is an increasing linear function of the information transmitted by the word, i.e., more frequent words, which carry less information, are recognized more quickly. (3) A given amount of redundant text is easier to remember than the same amount of text with more information. Some of the facilitating effects on retention of gross features of stimuli such as "organization and meaning," which clearly involve sequential dependencies, may be more adequately predicted from measures of redundancy. (4) One of the differences between human and animal behavior in certain simple situations is that human behavior shows dependency on its own past farther back in time than that of animals. This is reflected in larger redundancy or stereotypy of behavior. Contrary to common beliefs, in these cases human behavior conveys less information and is a priori more predictable than that of animals.
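The figures quoted for written English in item (1) can be checked with a short calculation. The 2-bit estimate is the rough value given in the text, and the variable names are illustrative.

```python
import math

# Maximum uncertainty for 26 equally probable, independent letters.
max_bits = math.log2(26)

# Rough estimate of the information actually conveyed per letter (from the text).
actual_bits = 2.0

# Redundancy: one minus the ratio of actual to maximum uncertainty.
english_redundancy = 1.0 - actual_bits / max_bits

print(round(max_bits, 1))            # 4.7
print(round(english_redundancy, 2))  # 0.57
```

A redundancy of about 57% is consistent with the statement that written English is at least 50% redundant.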

Fitting a Functional Relation

This simple type of application is quite common: to select from some class of simple functions one which adequately describes the relationship between two variables. As an example we present Clark Hull's basic equation for the learning process.

All curves of learning deal with behavioral variables such as reaction time, per cent correct choices, and rate of responding as a function of time or trials. They usually show decreasing changes and eventually stabilize at some asymptotic level. On this basis and on analogy to biological growth functions and simple chemical reactions, earlier theorists had proposed that the rate of change of performance with time or trials should be proportional to the amount of change yet to take place. Seeking a curve with these properties Hull chose the exponential growth function $H = M(1 - 10^{-iN})$, where $H$ is "habit strength," $N$ is trials, $M$ is asymptotic performance, and $i$ reflects the rate of learning. After he succeeded in fitting a variety of learning data with this function he tried to identify experimental variables affecting $M$ and $i$. Several were found to affect $M$, for example the degree of hunger and magnitude of reward, but the constant $i$ could only be said to characterize individual subjects under nearly constant conditions. While Hull's theory dealt with many other variables, this basic equation played a central role. By combining this postulate with several others he produced the first systematic effort of any scope to build a quantitative learning theory.
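A brief numerical sketch may help show why the exponential growth function has the property the earlier theorists wanted. The values chosen for the asymptote and the rate constant are hypothetical, and the function name is an assumption for illustration.

```python
def habit_strength(trials, asymptote, rate):
    """Hull's growth function: H = M * (1 - 10 ** (-i * N))."""
    return asymptote * (1.0 - 10.0 ** (-rate * trials))

M, i = 100.0, 0.05   # hypothetical asymptote and learning-rate constant
curve = [habit_strength(n, M, i) for n in range(41)]

# On every trial, the gain divided by the amount still to be gained
# is the same fraction, 1 - 10 ** (-i).
ratios = [(curve[n + 1] - curve[n]) / (M - curve[n]) for n in range(40)]

print(round(curve[20], 1))                       # 90.0
print(round(min(ratios), 6) == round(max(ratios), 6))  # True
```

Because each trial removes a constant fraction of the remaining distance to the asymptote, the increments shrink steadily and the curve levels off, as learning curves usually do.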

A Simple Stochastic Model for Learning

One of Hull's other postulates was that an autonomous "oscillatory" process intervened between habit and behavior. He assumed that, from moment to moment, this produced random changes in effective habit strength for each individual. Its exact value at any time was unpredictable although the variations were assumed to follow the normal probability distribution. In this sense Hull was the first to use a probabilistic approach in learning. However, the number of empirical constants and over-all complexity of Hull's system did not lend themselves to rigorous prediction, and later statistical models did not build on his work.

Ten years ago W. K. Estes, and R. Bush and F. Mosteller began to view learning as a stochastic process. Their first step was to treat behavioral probabilities directly rather than through an underlying deterministic growth process. Other response measures such as reaction time and rate were then handled by applying probability theory to the basic "probability of response" variable. Estes began by analyzing the details of stimulation more closely. He proposed that the stimulus situation confronting an organism is composed of a number of elements and is constantly changing. On any one trial the organism faces a sample of the total possible stimulus elements and executes one of a mutually exclusive and exhaustive set of responses available to him (including "no action"). Every stimulus element is assumed to be in a state of "connection" to one and only one response, in the sense that, if it alone were present, that response would surely occur. In the presence of multiple stimulation, however, the probability of any response is equal to the proportion of stimulus elements connected to it.

Learning is a procedure whereby stimuli are disconnected from one response and connected to another, whereupon the second response gains in probability. The events which cause this change in connection are called "reinforcements" of the second response. Many reinforcements are rewards in the common sense, but others are not: telling a subject "correct" for pushing one of two buttons will reinforce that response, but so will telling him "wrong" for pushing the other.

Learning is gradual since it takes time for all the potential stimuli in any situation to become connected to the correct response. The rate of learning depends on the proportion of potential stimuli which are actually sampled by the subject on each trial, a key constant which is labeled $\theta$.

In the case of two possible responses where one is always reinforced and the other never, the gain in probability for the first response on each trial is $\theta(1 - P)$. The learning curve this implies is $P = 1 - (1 - P_0)(1 - \theta)^{N-1}$, where $P_0$ is the initial probability of the response and $N$ is trials. This curve has similar properties to Hull's basic equation and enables Estes to handle the simple case of 100% reinforcement.

But the most striking application of statistical learning theory - one which earlier theorists could not predict, and which has aroused the interest of statisticians, economists, and others - is to the case where each response is reinforced only part of the time. For example, let one response be reinforced 75% of the time and the other 25%. The "rational" solution to this problem for a subject who wishes to maximize the number of reinforcements he receives, is to give the first response all the time. But statistical learning theory predicts that he will eventually give it just 75% of the time - which is what happens. The realization that man does not maximize his profits in many choice situations is just one more blow in a long series which have been dealt by mathematical reasoning to moribund intuitive methods in the science of behavior.
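The 75% prediction can be illustrated with a short calculation on the average response probability. The sketch rests on an assumption not spelled out above: when the other response is reinforced, the sampled proportion of stimuli becomes connected to it, so the first response loses probability in proportion to its current value. The names and starting values are hypothetical.

```python
def expected_next(p, theta, pi):
    """Mean probability of the first response after one trial.

    With probability pi the first response is reinforced (gain theta * (1 - p));
    otherwise the other response is reinforced (assumed loss theta * p).
    """
    return p + pi * theta * (1.0 - p) - (1.0 - pi) * theta * p

p, theta, pi = 0.5, 0.1, 0.75   # hypothetical start, sampling constant, 75% schedule
for _ in range(200):
    p = expected_next(p, theta, pi)

print(round(p, 3))   # 0.75
```

Under this assumption the average probability settles at the reinforcement rate itself, whatever the starting value or the size of the sampling constant, rather than climbing to 100% as the "rational" solution would require.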

Suggested Reading

Bush, R. and Mosteller, F., Stochastic Models for Learning. New York: Wiley, 1955.

Estes, W. K., "The Statistical Approach to Learning Theory," in Koch, S. (ed.) Psychology: A Study of a Science, Study 1, Vol. 2. New York: McGraw-Hill, 1959.

Hull, Clark L. Principles of Behavior. New York: Appleton, 1943.

Luce, R. Duncan (ed.) Developments in Mathematical Psychology. Glencoe, Ill.: The Free Press, 1960.

The modern social scientist increasingly needs to know something of higher mathematics if he is to keep up with his field. Psychology especially shows this development, and the editors have asked Professor Butler to present a few examples of how this new alliance works.

View Full Issue

View Full Issue

More From This Issue

-

Feature

FeatureA Dollop of Yankee Talk

February 1961 -

Feature

FeatureCampus Cosmopolitans

February 1961 -

Feature

FeatureNow They're Flicks, Not Movies, But The Nugget Still Carries On

February 1961 By GEORGE O'CONNELL -

Feature

FeatureDr. Myron Tribus of UCLA Named Dean of Thayer School

February 1961 -

Feature

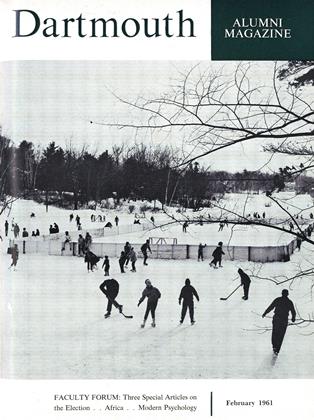

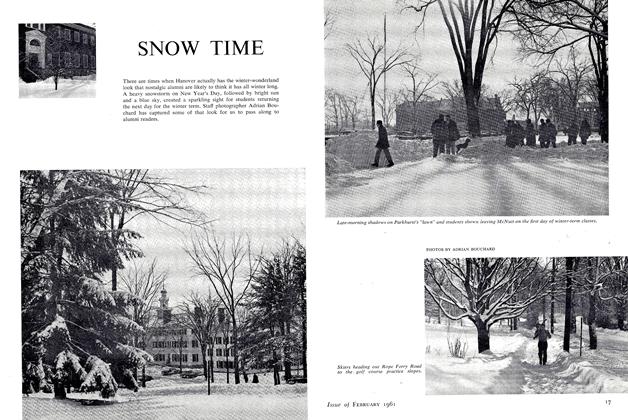

FeatureSNOW TIME

February 1961 -

Article

ArticleProblems of Land Development in the New African Nations

February 1961 By BARRY N. FLOYD,