A philosopher explains why we shouldn't trust computers.

Once upon a time in a story in Plato's Republic, a shepherd found a ring and discovered that when he wore it and turned the collet of the ring inside his hand, he could become invisible. Turning the collet back made him visible again. Immediately seeing the power of this ring, the shepherd set off for the royal court, where he seduced the queen, killed the king, and captured the kingdom. Plato's fictional narrator Glaucon uses this story of the Ring of Gyges to challenge Socrates to explain why people should be moral if they can act unjustly and get away with it.

Had computers been part of Plato's world, he might have had the shepherd discover the hidden powers of computers. Computing is a contemporary Ring of Gyges, presenting ethical challenges and raising the specter of new kinds of invisibility.

Though computing does not literally make people invisible, it can effectively hide their actions. A clever agent can move surreptitiously within a computer system. Consider Stanley Mark Rifkin, a computer consultant for Security Pacific National Bank, who secretly used computer codes to transfer $10.2 million to a Swiss bank account and eventually converted the money to diamonds. Had he not bragged about his accomplishments, he might never have been caught. Consider, too, the case of a group of New Yorkers who reprogrammed their mobile phones to avoid $40,000 in phone charges. Or the Hertz car rental company, whose corporate computer allegedly was programmed to produce two estimates for damaged rental cars: a low rate for repair and a high rate for customers and insurers. Of course, embezzlement, fraud, and scams were around long before computers, just as seduction, murder, and conquest were around before Plato's shepherd discovered the ring. Computing doesn't cause unethical behavior, but it may make such behavior irresistibly tempting to some people.

The Gyges effect is enhanced by the fact that the consequences of computer actions are often remote; frequently, to act by computer is to act at a psychological and physical distance from the victim. The consequences of an action may seem less visible to the actor and perhaps less real. For many people, computing produces an ethical blind spot. Breaking into a computer over a phone link and scanning personal files is regarded by some not as an invasion of privacy but as a prank. Releasing a computer virus into a network is not regarded as an act of sabotage but as a way of testing another computer's security system. Routine and widespread theft of software is not viewed as stealing but simply making a few additional copies. People who would never look into another's filing cabinet without permission or intrude into a stranger's house to test the security system or steal from a store are sometimes willing to perform comparable acts in a computing environment without any sense of the ethical dimension of their actions.

Yet another kind of invisibility factor raises questions about the wisdom of trusting complex and potent computer programs. Some programs are so complicated that no one person or group of people really understands how such software will behave under every set of circumstances. A small imperfection in the logic of such a program may be impossible to see, but its effects may be devastating. Such was the case in 1990 when AT&T's long-distance system crashed. Just one wayward command in a two-million-command program for routing calls shut down one of the nation's major phone networks for hours. In another case, at least two workers died in 1986 when a computer bug caused a linear accelerator to raise a metal plate and unleash lethal doses of radiation.

The invisibility of software bugs is frustrating because we can see software. We can list the computer instructions and read them. Yet even after carefully reading all of the computer instructions of a large program, we cannot be certain how the program will function, nor are we always sure about the environment in which the software will function. Even if a piece of complex software contains no bugs and the computer that runs it does not malfunction, we may not anticipate the results of using the software. Consider the computerized stock market. Whatever the initial cause of the 1987 Black Monday crash might have been, there is little doubt that computers greatly amplified the result. The computers did exactly what they were programmed to do: they protected investors by trading vast sums of money in very short periods of time (a hundred million dollars can be exchanged in a matter of minutes). Unseen computer decision-making, yet another example of computer invisibility, accelerated a process beyond anticipated scenarios.

This kind of invisibility factor is particularly serious in the context of computerized weapons. The effectiveness of smart weapon systems was decisively demonstrated in the recent war with Iraq. We had smart Cruise missiles; Iraq had dumb SCUDs. But what about the future? Computerized weapons will be improved. They will become a necessity for any nation wishing to prove its stature. This mix of human emotions, politics, and sophisticated computerized weapons should give us pause. Will individuals or nations that can make accurate long-range strikes without detection or visual confirmation of targets be more likely to wage terrorism or war? Consider how easily and effectively an American warship using highly computerized weapon systems shot down an Iranian airliner.

And finally, there is the nightmare scenario for an ever more complex generation of computer weapons. Because computers react faster than humans, it is militarily advantageous to computerize more and more of the decision-making within the weapon systems themselves. It will be irresistible to let computers play a larger role in controlling weapon systems. Of course, humans will be left in charge of these weapons, but will we really be in charge? Weapon exchanges, perhaps triggered accidentally, might accelerate at computer speeds like those we witnessed in the stock market debacle: beyond human comprehension or control.

He's got a hard disk named Socrates. He's designed software that allows students to manipulate the overlapping circles of Venn diagrams and write proofs to improve their understanding of logic. But there's more to Philosophy Professor James Moor's computer-ease than merely keeping up with the latest high-tech teaching methods. In utilizing computers to demonstrate logic and in pondering the ethical sides of the computer revolution, Moor is extending the centuries-long link between philosophy, mathematics, and logic.

"Pascal, the seventeenth-century mathematician, was also a philosopher. He invented a computer-like adding machine," Moor explains before rattling off the names of other philosopher-mathematicians, such as Leibnitz and Russell. "Descartes thought of humans as machines with minds and argued that mere machines will never think. Hobbes took an opposing view, arguing that thinking is a matter of reckoning, that is, of calculation. That was the conceptual ancestor of today's artificial intelligence movement."

Moor got his start in mathematics, majoring in it at Ohio State, then moving to a master's in philosophy at the University of Chicago. In his Indiana University doctoral studies in the history and philosophy of science he explored the age-old question of what the human mind is like. The jump from what the mind can do to what machines can do was a logical extension of this inquiry.

"Computers have been successful in well-defined activities," says Moor. "Computers play chess well, but will computers ever succeed in less well defined activities? For example, could a computer be a judge? My students often say that they would prefer a computer judge if they were innocent but a human judge if they were guilty."

Since coming to Dartmouth in 1972, Moor has taken advantage of what he calls a distinguishing feature of the College: its advanced computer network. The philosophy-teaching software he originated gets a regular workout from students here and around the world; recently his Venn diagram program was translated into French. Moor's work on the ethical and social implications of computers also has a life beyond Dartmouth. He hopes that discussion of such issues will result in improved public policy.

Computers are our friends, up to a point, according to Philosophy Professor James Moor.

View Full Issue

View Full Issue

More From This Issue

-

Feature

FeatureThe Higher-Ed Book Biz

June 1991 By Robert Sullivan '75 -

Feature

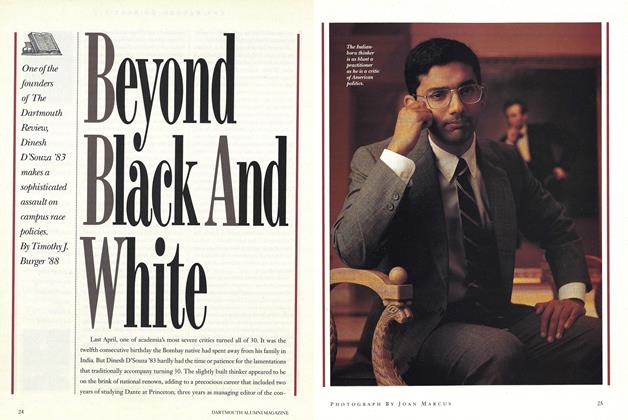

FeatureBeyond Black And White

June 1991 By Timothy J. Burger '88 -

Feature

FeatureBUG SLAYER

June 1991 By Nancy Freiberg -

Article

ArticleDR. WHEELOCK'S JOURNAL

June 1991 By "E. Wheelock" -

Article

ArticleThe Alumni Awards

June 1991 -

Article

ArticleORIGINALS AND COPIES

June 1991 By James O. Freedman

Karen Endicott
